Defining Scalability

Learning Objectives

- You know what the term scalability means.

- You know why scalability is needed.

- You know of potential consequences of failing to scale.

- You know of vertical and horizontal scaling and know their differences.

- You know of advantages and disadvantages of vertical and horizontal scaling.

Accommodating for growth

As web applications become more popular, the amount of data and the number of users accessing the applications can grow rapidly. The growth can lead to bottlenecks, slower response times, and potential downtime, all of which can negatively influence user experience.

Even small delays — not to mention downtime — can have a significant impact on user behavior. For example, a study by Akamai found that a 100-millisecond delay in website load time can reduce conversion rates by 7 percent, and a two-second delay can increase bounce rates by 103 percent. The conversion rate is the percentage of visitors who complete a desired action, such as making a purchase. Bounce rate, on the other hand, is the percentage of visitors who leave a website after viewing only one page.
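To make the two metrics concrete, the short Python sketch below computes them from hypothetical visit counts and then applies the changes reported in the study. The traffic numbers are invented for illustration. The sketch also assumes that both reported figures are relative changes to the baseline rate (7 percent of the conversion rate, not 7 percentage points). The two findings are separate measurements and are only shown side by side here.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Percentage of visitors who completed the desired action, e.g., a purchase."""
    return 100 * conversions / visitors


def bounce_rate(single_page_visits: int, visitors: int) -> float:
    """Percentage of visitors who left after viewing only one page."""
    return 100 * single_page_visits / visitors


# Hypothetical traffic for one day; the numbers are invented for illustration.
visitors = 10_000
purchases = 300
single_page_visits = 4_000

baseline_conversion = conversion_rate(purchases, visitors)
baseline_bounce = bounce_rate(single_page_visits, visitors)

# Assumption: both reported figures are relative changes to the baseline rate.
conversion_after_100ms = baseline_conversion * (1 - 0.07)  # 7 percent lower
bounce_after_2s = baseline_bounce * (1 + 1.03)              # 103 percent higher

print(f"conversion: {baseline_conversion:.2f}% -> {conversion_after_100ms:.2f}%")
print(f"bounce:     {baseline_bounce:.1f}% -> {bounce_after_2s:.1f}%")
```

With these numbers, the conversion rate falls from 3.00 to 2.79 percent, and the bounce rate roughly doubles from 40 to about 81 percent.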

See Akamai Online Retail Performance Report: Milliseconds Are Critical.

Scalability is a characteristic of systems, networks, and applications that defines their ability to accommodate increasing workloads without compromising performance. As demand rises — whether through more users, higher volumes of data, or increased request rates — systems should maintain efficiency, responsiveness, and reliability.

Scalability has been defined in various ways, but the common theme is that scalability is about the ability to grow while maintaining performance. Here are a few definitions:

- Andresen et al. (1996): “We call a system scalable if the system response time for individual requests is kept as small as theoretically possible when the number of simultaneous HTTP requests increases, while maintaining a low request drop rate and achieving a high peak request rate.”

- Fox et al. (1997): “By scalability, we mean that when the load offered to the service increases, an incremental and linear increase in hardware can maintain the same per-user level of service.”
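Under the linear scaling that Fox et al. describe, the number of servers grows in proportion to the load. A back-of-the-envelope sketch, assuming identical servers and a hypothetical capacity of 500 concurrent users per server, could look like this:

```python
import math


def servers_needed(concurrent_users: int, users_per_server: int) -> int:
    """Smallest number of identical servers that can serve the given load."""
    return max(1, math.ceil(concurrent_users / users_per_server))


# Hypothetical per-server capacity; in practice it would come from load testing.
USERS_PER_SERVER = 500

for users in (400, 5_000, 50_000):
    print(f"{users} users -> {servers_needed(users, USERS_PER_SERVER)} server(s)")
```

In this idealized model, ten times the load needs ten times the servers. Real systems often fall short of perfectly linear scaling because of shared components, such as a single database, that every server depends on.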

In addition to being able to grow while maintaining performance, scalability is also about being able to handle increased demand in a cost-efficient manner.

- Hall et al. (1996): “By scalability we mean that the proposed protocols for data delivery are cost-effective even when there are a very large number (100’s, 1000’s, even tens of thousands) of destinations that the data needs to be delivered to.”

Scalability is not only about handling increased demand but also about handling fluctuating demand. Applications may experience spikes in demand, which can be temporary or long-lasting. Increases in demand may be sudden, e.g., due to a marketing campaign, news coverage, or a social media post. If the application cannot handle the traffic, the result may be lost sales, decreased user satisfaction, and damage to the company’s reputation.

As an example, Amazon’s Prime Day did not go as planned in 2018. During the company’s biggest sales event of the year, its website experienced downtime due to the increased traffic. Estimates of the lost sales start at 72 million USD. Sudden spikes in demand are common enough to have been given names such as the hug of death and the Slashdot effect.

While the above examples mostly highlight the need to meet increasing demand, scalable applications should also be able to scale down when demand decreases. This is especially important when resource costs are based on usage. For example, if an application is hosted on a cloud platform, or on on-premises servers that are billed by usage, the cost of running the application can be reduced by scaling down when demand decreases.
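The effect of scaling down on a usage-based bill can be estimated with simple arithmetic. The sketch below compares two options. The first keeps enough servers for the daily peak running around the clock. The second matches the number of servers to each hour's demand. The demand curve, the per-server capacity, and the hourly price are all hypothetical.

```python
import math

# Hypothetical demand in requests per second for each hour of one day (24 values).
hourly_demand = [50] * 8 + [400] * 4 + [900] * 4 + [400] * 4 + [100] * 4
REQUESTS_PER_SERVER = 100     # assumed capacity of one server (requests per second)
PRICE_PER_SERVER_HOUR = 0.10  # assumed usage-based price in USD


def servers_for(load: int) -> int:
    """Servers needed to serve the given load, keeping at least one running."""
    return max(1, math.ceil(load / REQUESTS_PER_SERVER))


# Option 1: keep enough servers for the daily peak running all day.
peak_servers = servers_for(max(hourly_demand))
fixed_cost = peak_servers * len(hourly_demand) * PRICE_PER_SERVER_HOUR

# Option 2: scale up and down so that each hour runs only the servers it needs.
scaled_cost = sum(servers_for(load) for load in hourly_demand) * PRICE_PER_SERVER_HOUR

print(f"provisioned for peak: {fixed_cost:.2f} USD/day")
print(f"scaled with demand:   {scaled_cost:.2f} USD/day")
```

With these made-up numbers, scaling with demand needs 80 server-hours instead of 216, so the daily bill drops from 21.60 to 8.00 USD.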

Vertical and horizontal scaling

Classically, scaling is divided into vertical and horizontal scaling: vertical scaling refers to adding more resources to an existing server, while horizontal scaling refers to adding more servers to distribute the load. Both have advantages and disadvantages.

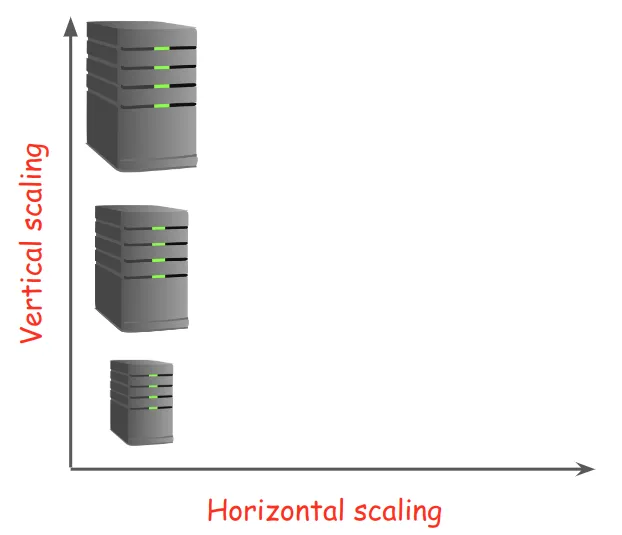

Vertical scaling

Vertical scaling means adding more resources to the current server. This includes upgrading hardware resources such as the number of available processors, the amount of available memory, or the size of the disk, which can improve the performance of the server.

Adding resources increases the processing power of the single server, which in turn allows it to handle more requests (Fig. 1).

In practice, however, there are limitations to scaling vertically:

- There is a physical limit to the amount of CPU, memory, and disk that can be added to a single server. This means that at some point, the server cannot be scaled further.

- The cost of upgrading hardware can be high, especially at the higher end where adding more resources can require specialized and expensive hardware.

In addition, vertical scaling may require downtime, although techniques like live migrations and hot-swappable components can be used to minimize the downtime.
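The physical limit on vertical scaling described above can be illustrated with a hypothetical catalog of machine sizes. Scaling up means picking a larger machine. Once the load exceeds what the largest machine can handle, there is nothing left to scale up to. The sizes and the per-core throughput below are invented for illustration.

```python
from typing import NamedTuple, Optional


class MachineSize(NamedTuple):
    name: str
    cpu_cores: int
    memory_gb: int


# Hypothetical catalog, ordered from smallest to largest.
CATALOG = [
    MachineSize("small", 2, 8),
    MachineSize("medium", 8, 32),
    MachineSize("large", 32, 128),
    MachineSize("xlarge", 96, 384),
]
REQUESTS_PER_CORE = 50  # assumed throughput of one core (requests per second)


def scale_up(load: int) -> Optional[MachineSize]:
    """Return the smallest machine that can handle the load, or None if none can."""
    for size in CATALOG:
        if size.cpu_cores * REQUESTS_PER_CORE >= load:
            return size
    return None  # the load is beyond what the largest single machine can handle


for load in (80, 1_000, 6_000):
    size = scale_up(load)
    print(load, "req/s ->", size.name if size else "no single machine is large enough")
```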

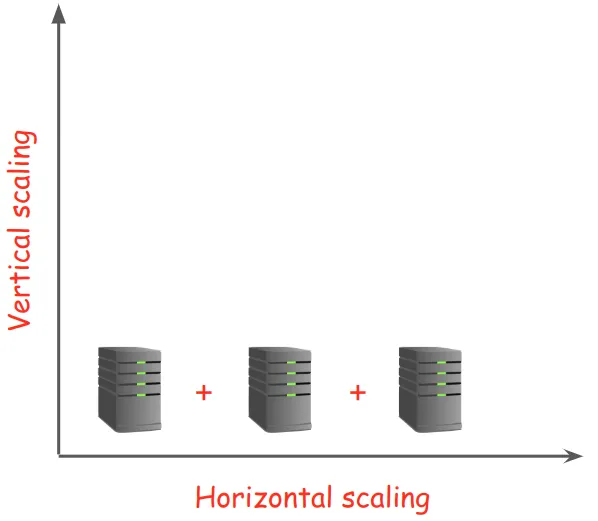

Horizontal scaling

Horizontal scaling means adding more servers to handle the workload (Fig. 2). As a classic example of horizontal scaling, take a look at the NASA Home Page from late 1996. To meet the global demand of seeing updates on the Mars Pathfinder mission, NASA mirrored the contents of their homepage to a number of servers, and linked users to those servers.

In a more modern web application context, distributing the workload over multiple servers is done using a load balancer, which distributes incoming requests to the servers. We discuss load balancing in more detail in later parts.
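Load balancing is covered later, but the core idea fits in a few lines. The sketch below uses round robin, which is one common strategy among several, to hand each incoming request to the next server in the pool. The server names are hypothetical, and a real load balancer would forward network traffic rather than return a name. New servers can join the pool while it is in use.

```python
from typing import List


class RoundRobinBalancer:
    """Hands out servers in turn so that requests are spread evenly."""

    def __init__(self, servers: List[str]) -> None:
        self.servers = list(servers)
        self._next = 0

    def add_server(self, server: str) -> None:
        # Scaling out: the new server starts receiving requests right away.
        self.servers.append(server)

    def pick(self) -> str:
        # Choose the next server in the rotation for the incoming request.
        server = self.servers[self._next % len(self.servers)]
        self._next += 1
        return server


balancer = RoundRobinBalancer(["server-a", "server-b"])
print([balancer.pick() for _ in range(4)])
balancer.add_server("server-c")
print([balancer.pick() for _ in range(3)])
```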

The advantages of horizontal scaling include ease and cost. Adding servers is typically easier than upgrading the hardware of a single server, and it can be done without downtime, as new servers can be added to the pool of servers without taking the system offline. Horizontal scaling is also often more cost-effective, as it allows the use of commodity hardware, which is cheaper than specialized hardware.

However, horizontal scaling also comes with its challenges:

- It requires a mechanism for distributing the incoming requests to the servers (i.e., load balancing).

- It requires a mechanism for handling state (e.g., a centralized database or a distributed cache), as the state of the application needs to be shared between the servers.

- It requires a mechanism for handling failures, as the failure of a single server should not bring down the entire system.
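The state and failure challenges are closely related. In the hypothetical sketch below, servers keep no session data of their own. Instead, they read and write a shared store, represented here by a plain dictionary that stands in for a centralized database or distributed cache. The load balancer skips servers that are marked as failed. Because the session lives outside the servers, a user's shopping cart survives even when the server that created it goes down.

```python
from typing import Dict, List

# Stand-in for a centralized database or distributed cache shared by all servers.
shared_sessions: Dict[str, List[str]] = {}


class AppServer:
    def __init__(self, name: str) -> None:
        self.name = name
        self.healthy = True

    def add_to_cart(self, user: str, item: str) -> List[str]:
        # State is written to the shared store, not to this server's own memory.
        cart = shared_sessions.setdefault(user, [])
        cart.append(item)
        return cart


class Balancer:
    def __init__(self, servers: List[AppServer]) -> None:
        self.servers = servers
        self._next = 0

    def pick(self) -> AppServer:
        # Round robin that skips failed servers; raises if none are healthy.
        for _ in range(len(self.servers)):
            server = self.servers[self._next % len(self.servers)]
            self._next += 1
            if server.healthy:
                return server
        raise RuntimeError("no healthy servers available")


a, b = AppServer("server-a"), AppServer("server-b")
balancer = Balancer([a, b])
balancer.pick().add_to_cart("alice", "book")  # handled by server-a
a.healthy = False                              # server-a fails
server = balancer.pick()                       # server-b takes over
print(server.name, server.add_to_cart("alice", "lamp"))
```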

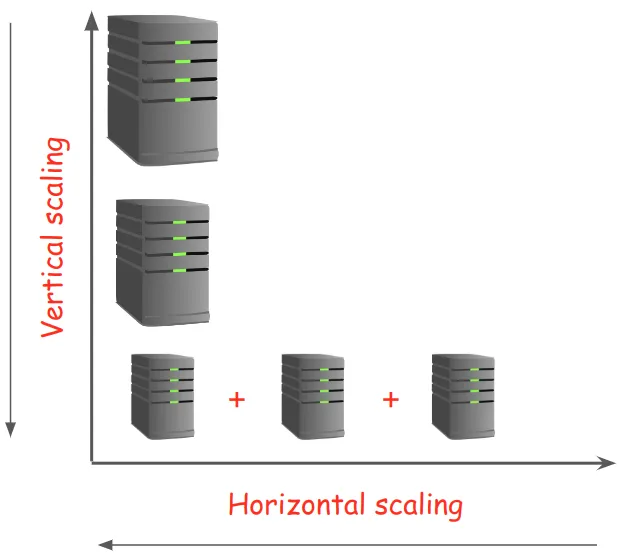

Vertical and horizontal scalability are not mutually exclusive, and they can be used together to match the needs of a system. As an example, a system could have a part that requires high-end GPUs for computationally heavy tasks, and a part that requires a large number of servers to handle more generic incoming requests.
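Combining the two approaches can be as simple as routing each kind of work to a differently scaled pool. In this hypothetical sketch, GPU-heavy jobs go to a small pool of large, vertically scaled machines. Ordinary requests are rotated over a larger pool of commodity, horizontally scaled servers using `itertools.cycle`. The pool names and job kinds are made up.

```python
from itertools import cycle
from typing import Dict, Iterator

# Hypothetical pools: one powerful GPU machine and many commodity servers.
POOLS: Dict[str, Iterator[str]] = {
    "gpu": cycle(["gpu-node-1"]),
    "web": cycle([f"web-{i}" for i in range(1, 9)]),
}


def route(job_kind: str) -> str:
    """Send compute-heavy jobs to the GPU pool and everything else to the web pool."""
    pool = "gpu" if job_kind == "model-inference" else "web"
    return next(POOLS[pool])


for kind in ("page-view", "model-inference", "page-view", "api-call"):
    print(kind, "->", route(kind))
```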

Adjusting to demand

While vertical and horizontal scalability are typically discussed in the context of meeting increasing demand, they can also be used to adjust to fluctuating demand. In such a case, the resources of a server or the number of servers would be increased or decreased as needed (Fig. 3).

This is more common in cloud environments, where servers are provisioned on demand and can be scaled up or down based on the current demand. In such environments, the cost of running the system can be optimized by only using the resources that are needed at any given time.
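An autoscaler typically repeats a simple calculation. It compares an observed metric with a target and adjusts the number of servers accordingly. The sketch below uses the proportional rule that the Kubernetes Horizontal Pod Autoscaler documents, desired = ceil(current * observed / target), and applies it to average CPU utilization. The 60 percent target, the bounds of 1 to 20 servers, and the readings are assumptions chosen for the example. The sketch also leaves out refinements such as tolerances and cooldown periods that production autoscalers add.

```python
def desired_servers(current: int, observed_pct: int, target_pct: int = 60,
                    min_servers: int = 1, max_servers: int = 20) -> int:
    """Proportional scaling rule: grow or shrink the pool so that average
    utilization moves toward the target, clamped to the allowed range."""
    # Integer ceiling division avoids floating-point rounding surprises.
    desired = -(-(current * observed_pct) // target_pct)
    return max(min_servers, min(max_servers, desired))


# Hypothetical average CPU utilization (percent) observed at successive checks.
servers = 4
for utilization in (90, 75, 30, 10):
    servers = desired_servers(servers, utilization)
    print(f"utilization {utilization}% -> {servers} server(s)")
```

With the readings above, the pool grows from 4 to 6 and then 8 servers, then shrinks back to 4 and finally 1 as utilization falls.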