Defining Artificial Intelligence

Learning Objectives

- You can explain what intelligence means and compare different definitions of artificial intelligence.

- You can discuss challenges in defining AI, including the “AI effect.”

- You can distinguish between weak AI and strong AI, and reflect on critiques of strong AI.

What is intelligence?

According to the Merriam-Webster dictionary, intelligence is “the ability to learn or understand or to deal with new or trying situations,” and “the ability to apply knowledge to manipulate one’s environment or to think abstractly as measured by objective criteria (such as tests).”

Breaking this down, intelligence involves several interrelated capabilities. It includes learning from experience — adapting behavior based on past events and feedback from the environment. It encompasses applying knowledge in new situations — transferring what has been learned to unfamiliar contexts rather than simply repeating memorized responses. It requires reasoning, problem-solving, and flexible adaptation — thinking through challenges and adjusting approaches as circumstances change.

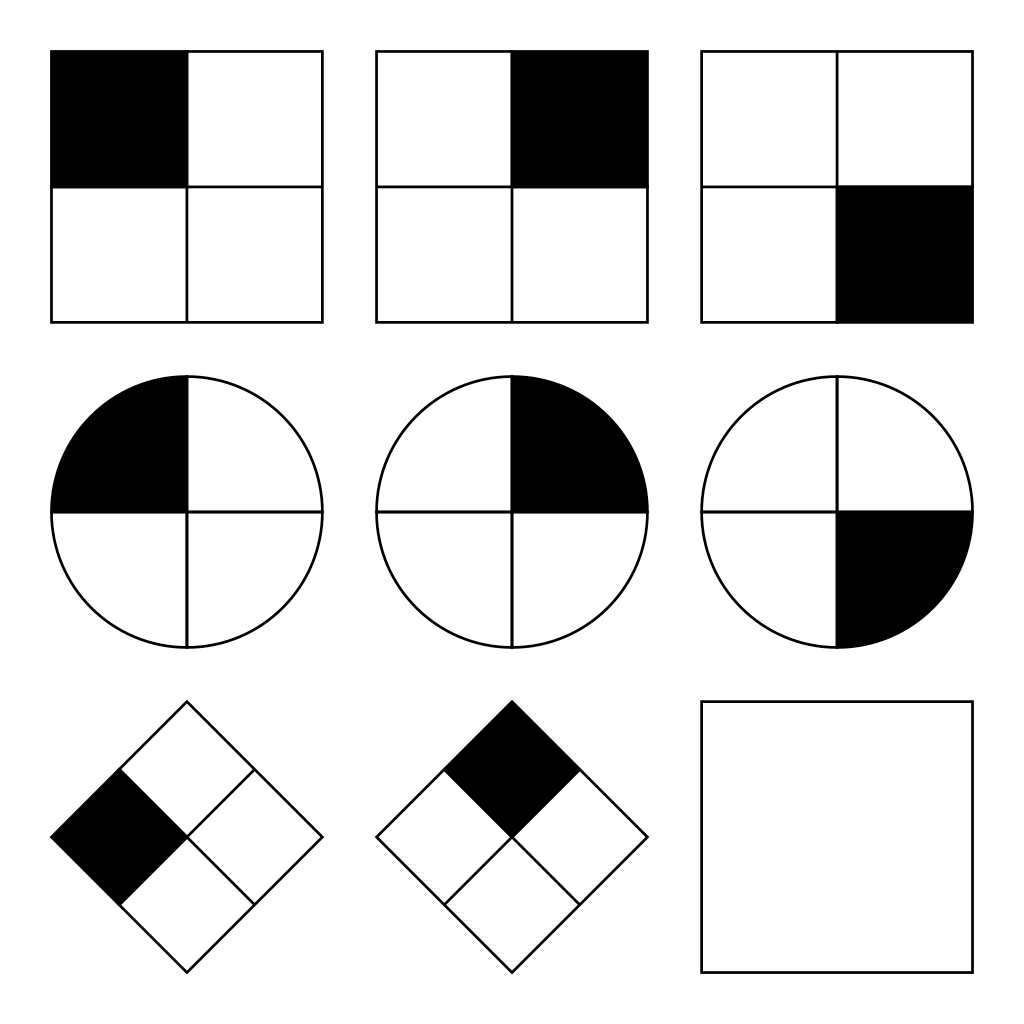

Traditionally, intelligence has been measured through tests of cognitive ability that assess reasoning, language comprehension, or pattern recognition. Figure 1 shows a problem from Raven’s Progressive Matrices, a widely used test of abstract reasoning. In this test, you must identify the underlying pattern in a series of shapes and determine which shape logically completes the sequence.

Figure 1. A problem from Raven’s Progressive Matrices. Image author: Life of Riley (CC BY-SA 3.0).

Intelligence tests like these face substantial criticism. They often overlook cultural contexts and emotional dimensions of intelligence that may be equally important for successful functioning in the world. Emotional intelligence, for instance, emphasizes the ability to recognize and manage emotions — both one’s own and those of others — which standard reasoning tests rarely assess. A person might excel at abstract puzzles while struggling to navigate social situations, understand how others feel, or manage interpersonal relationships effectively.

It is also useful to distinguish intelligence from wisdom. Intelligence involves learning, reasoning, and problem-solving capabilities. Wisdom emphasizes sound judgment, accumulated experience, and acting in ways that benefit oneself and others over the long term. These qualities can exist somewhat independently. Someone can demonstrate exceptional intelligence in specific domains — solving complex mathematical problems or mastering strategic games — while making poor life decisions that show limited wisdom. Conversely, someone with less formal education or lower scores on abstract reasoning tests might offer profound guidance based on lived experience and deep understanding of human nature.

What is artificial intelligence?

The Merriam-Webster dictionary defines artificial intelligence (AI) as “the capability of computer systems or algorithms to imitate intelligent human behavior.”

In practical terms, this means designing computational systems that can handle tasks typically associated with human intelligence. These tasks include understanding natural language (interpreting sentence meaning or responding appropriately to questions), recognizing patterns in speech or images (identifying objects in photographs or transcribing spoken words into text), solving complex problems (finding optimal routes through networks or diagnosing medical conditions from symptoms), and making decisions (recommending products based on preferences or evaluating loan applications).

However, the definition of AI proves surprisingly slippery. As technology advances, what once seemed to require intelligence often becomes reclassified as “just software” or “just algorithms.” This moving boundary — where yesterday’s AI becomes today’s routine computation — is known as the AI effect, which we’ll examine more closely below.

Example: An algorithm for tic-tac-toe

To make these abstract ideas more concrete, consider the game of tic-tac-toe (Figure 2). Is a program that plays tic-tac-toe competently an example of artificial intelligence?

Figure 2. A game of tic-tac-toe. Image author: Mazeo.

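Here is a straightforward algorithm for playing as X, the player who moves first. The first two rules cover X’s opening moves; on every later turn, apply the remaining rules in the order listed: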

- Start by placing an X in a corner square.

- If your opponent places an O in the opposite corner, choose a different free corner; otherwise, take the opposite corner.

- If you can win on this move (two Xs and an empty square forming a line), make the winning move.

- If your opponent is about to win (two Os and an empty square forming a line), block them.

- Otherwise, play in any free corner or, if no corners are available, a side square.

Following these rules consistently, you will never lose at tic-tac-toe, and you may win when opponents make mistakes. The algorithm performs its task well, yet it operates entirely through a predetermined set of instructions. It doesn’t learn from past games, adapt its strategy based on different opponents’ playing styles, or develop any deeper understanding of the game beyond following its programmed rules.
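The rules above can be sketched in Python as follows. This is a minimal illustration rather than a definitive implementation: the board representation (a list of nine squares numbered 0 to 8, row by row), the function names, the choice of the top-left corner as the opening move, and the fallback to the center square when no corner or side is free are all assumptions that do not come from the rules themselves. The helper `x_ever_loses` plays the rules against every possible sequence of O replies to check the claim that X never loses.

```python
from typing import List, Optional

# The eight winning lines on a 3x3 board whose squares are numbered 0..8,
# row by row from the top-left corner.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]
CORNERS = (0, 2, 6, 8)
SIDES = (1, 3, 5, 7)
CENTER = 4


def winner(board: List[str]) -> Optional[str]:
    """Return "X" or "O" if that player has three in a line, else None."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def completing_square(board: List[str], player: str) -> Optional[int]:
    """Return an empty square that completes a line for `player`, if any."""
    for line in LINES:
        marks = [board[i] for i in line]
        if marks.count(player) == 2 and marks.count(" ") == 1:
            return line[marks.index(" ")]
    return None


def choose_x_move(board: List[str]) -> int:
    """Pick X's next square by applying the fixed rules in order."""
    moves_made = 9 - board.count(" ")
    if moves_made == 0:
        return CORNERS[0]  # Opening move: a corner (top-left is an assumption).
    if moves_made == 2:
        # Second X move: take the corner opposite X's first one if it is
        # free; otherwise O is there, so take a different free corner.
        opposite = 8 - board.index("X")
        if board[opposite] == " ":
            return opposite
        return next(c for c in CORNERS if board[c] == " ")
    # Later moves: win if possible, otherwise block O's winning square.
    for player in ("X", "O"):
        square = completing_square(board, player)
        if square is not None:
            return square
    # Otherwise a free corner, then a side. The center fallback is an
    # assumption added so the function always returns a free square.
    return next(s for s in CORNERS + SIDES + (CENTER,) if board[s] == " ")


def x_ever_loses(board: Optional[List[str]] = None) -> bool:
    """Let X follow the rules against every possible sequence of O replies."""
    board = [" "] * 9 if board is None else board.copy()
    board[choose_x_move(board)] = "X"
    if winner(board) == "X" or " " not in board:
        return False  # X won or the game is drawn.
    for square in range(9):
        if board[square] == " ":
            board[square] = "O"
            if winner(board) == "O" or x_ever_loses(board):
                return True
            board[square] = " "
    return False


print(x_ever_loses())  # False: no sequence of O moves beats these rules
```

In this sketch the whole strategy is a handful of conditionals and lookups over a fixed table of lines, and nothing in the program changes from one game to the next.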

This example illustrates a central challenge in defining AI: some algorithms perform tasks that appear intelligent without exhibiting qualities we typically associate with intelligence — flexibility, learning, understanding, or generalization. The tic-tac-toe algorithm doesn’t truly understand the game in any meaningful sense. It simply executes a fixed set of instructions that happen to produce good moves.

Where exactly should we draw the line between “clever programming” and “artificial intelligence”? If we call the tic-tac-toe algorithm AI, would we also classify a thermostat as AI since it “responds” to temperature changes? If not, what distinguishes them? These questions reveal how difficult it is to define AI precisely.

The AI effect

The AI effect describes a paradox in how we perceive artificial intelligence: once a system reliably performs a task, people tend to stop calling it AI. As John McCarthy — one of the founders of the AI field — famously observed:

“As soon as it works, no one calls it AI anymore.”

This phenomenon occurs because our perception of intelligence remains closely tied to what seems difficult, mysterious, or beyond mechanical explanation. When we understand precisely how something works — when we can see the gears turning, so to speak — it loses its appearance of intelligence and becomes merely computation or automation.

Historical examples make this pattern clear:

Electronic calculators were once considered a form of artificial intelligence. When they first appeared in the mid-20th century, machines that could instantly perform complex arithmetic seemed to exhibit a kind of mechanical intelligence. Calculations that required significant mental effort from humans were completed in fractions of a second. Today, we simply see calculators as basic tools.

Optical character recognition (OCR) — machines reading printed or handwritten text — represented cutting-edge AI research throughout the 1960s and 1970s. The challenge of taking an image of text and converting it to machine-readable characters seemed to require sophisticated perception and interpretation. Now, OCR is built into smartphones and document scanners as a standard feature that users barely notice.

Chess programs were considered major AI achievements when they began defeating strong human players in the 1990s, culminating in IBM’s Deep Blue defeating world champion Garry Kasparov in 1997. Chess had long been viewed as a domain requiring deep intelligence — strategic thinking, pattern recognition, and planning. Today, chess engines running on smartphones can defeat any human player, yet few people think of them as “real” AI.

Spam filtering in email once seemed to require intelligence: understanding content, recognizing patterns, and distinguishing legitimate messages from unwanted ones. Now it’s a background feature that users expect (or at least hope) to work automatically.

The AI effect means the goalposts continually move. What counts as AI today — perhaps conversing in natural language or generating images from text descriptions — may be considered routine software in a decade when we understand the underlying mechanisms more completely and the technology becomes commonplace.

This creates a genuine definitional challenge. If “AI” refers only to tasks that still seem mysterious or impressive, then AI is always whatever we haven’t yet achieved, and the term becomes a moving target rather than a stable category.

Different definitions of AI

Because of this shifting boundary and the field’s inherent complexity, researchers and philosophers have proposed multiple definitions of AI that emphasize different aspects. A few influential definitions include:

- “The science and engineering of making intelligent machines, especially intelligent computer programs.” (John McCarthy)

- “The science of making machines do things that would require intelligence if done by men.” (Marvin Minsky)

- “The capacity of computers or other machines to exhibit or simulate intelligent behaviour…especially by using machine learning to extrapolate from large collections of data.” (Oxford English Dictionary)

These definitions differ in meaningful ways. McCarthy’s definition emphasizes the engineering aspect — AI as the practical discipline of building intelligent machines. Minsky’s formulation focuses on capability — machines doing things that would require intelligence if humans did them. The OED definition highlights modern methods, particularly machine learning and the role of data in enabling intelligent behavior.

Each definition captures something important while leaving room for interpretation. McCarthy’s engineering focus acknowledges that AI is not just theoretical but involves building actual working systems. Minsky’s emphasis on mimicking human capabilities suggests the bar for AI is “human-level performance” on intelligent tasks. The OED’s inclusion of “exhibit or simulate” acknowledges the philosophical question of whether machines truly possess intelligence or merely appear to.

Because of the AI effect and these definitional challenges, many introductions to AI rely on examples rather than strict definitions. By examining what AI systems can do — playing chess at world-champion level, driving cars autonomously, answering questions conversationally, diagnosing diseases from medical images, translating between languages — we get a practical sense of the field even if the boundaries remain somewhat fuzzy.

This pragmatic, example-based approach has limitations. It makes AI a somewhat circular concept: AI is whatever AI systems do.

Weak AI versus strong AI

Researchers commonly distinguish between weak AI and strong AI, representing fundamentally different levels of capability and ambition:

Weak AI (also called narrow AI) refers to systems designed and trained for specific, well-defined tasks within bounded domains. Examples include programs that play tic-tac-toe or chess, systems that recognize faces in photographs, voice assistants that answer particular types of questions, algorithms that translate text between specific languages, or applications that recommend products based on purchase history.

These systems can excel at their designated tasks, sometimes surpassing human performance within their narrow domains. However, they cannot meaningfully transfer their abilities to other areas. A face recognition system, no matter how accurate, cannot suddenly start playing chess, writing poetry, or diagnosing medical conditions. Its “intelligence” is entirely confined to its training domain. The system has no general understanding that would allow flexible application to new problems.

Strong AI (also called general AI or artificial general intelligence, abbreviated as AGI) describes a hypothetical system that could match or exceed human intelligence broadly across domains. Such a system would not just excel at predefined tasks but could learn any task that humans can learn, reason flexibly across entirely different domains, transfer knowledge between areas, and potentially possess consciousness or self-awareness.

A strong AI system could, in principle, teach itself new skills through experience, understand context deeply enough to operate effectively in novel situations without specific training, and exhibit the kind of flexible, general-purpose intelligence that humans possess. It would not be limited to narrow domains but could engage with the open-ended complexity of the real world.

All progress in AI to date has been in weak AI. Despite impressive advances — systems that can converse naturally, generate realistic images, write code, or drive cars — every current AI system remains fundamentally narrow. Large language models like those powering modern conversational AI can handle many tasks but still operate within the constraints of pattern recognition and statistical prediction based on training data. They don’t truly understand in the way humans do, cannot reliably reason about entirely novel situations beyond their training distribution, and lack the flexible, general-purpose intelligence that would constitute strong AI.

Strong AI remains speculative and may require fundamentally different approaches from current methods based on statistical learning from large datasets. Whether strong AI is achievable, and if so when, is a matter of active debate among researchers.

Chinese Room argument

Philosophers have raised fundamental critiques of whether strong AI is even possible in principle. John Searle’s famous Chinese Room argument (1980) challenges the idea that manipulating symbols according to rules — which is essentially what computers do — can ever constitute genuine understanding.

In Searle’s thought experiment, imagine a person who doesn’t speak Chinese sitting alone in a room with a comprehensive rulebook for matching Chinese characters. People outside the room pass in questions written in Chinese. The person inside follows the rulebook to match characters and produces responses in Chinese that are passed back out. To observers outside, it appears the room “understands” Chinese — questions go in, appropriate answers come out. Yet the person inside the room doesn’t understand Chinese at all; they’re simply following mechanical rules for symbol manipulation.

Searle argues that computers are fundamentally similar to the person in the room. They manipulate symbols (data) according to rules (programs), and they can produce outputs that appear intelligent. However, this doesn’t mean they genuinely understand anything. Understanding, Searle suggests, requires more than symbol manipulation — perhaps it requires consciousness, intentionality, or subjective experience that emerges from biological processes but not from computational ones.

Whether Searle’s argument succeeds remains controversial. Some philosophers argue that understanding might be a matter of sufficiently sophisticated information processing, regardless of implementation — that if a system behaves indistinguishably from an understanding system, we should credit it with understanding. Others defend Searle’s position that genuine understanding requires something beyond computation. This debate touches on deep questions about consciousness, meaning, and the nature of mind that remain unresolved.

While strong AI is still theoretical and its very possibility is philosophically contested, it has been a prominent theme in science fiction for decades.

In 2001: A Space Odyssey, the HAL 9000 computer demonstrates human-like reasoning, decision-making, and even emotional responses, raising questions about machine consciousness, loyalty, and what happens when an intelligent system’s priorities conflict with human needs.

In the Star Wars universe, the droid R2-D2 exhibits characteristics associated with strong AI — flexible problem-solving across varied situations, apparent loyalty and emotional connection to other characters, and distinct personality. Interestingly, R2-D2 communicates only through beeps and whistles, yet other characters understand this “language” perfectly, suggesting either exceptional machine intelligence or exceptional human-machine understanding.

Fictional portrayals of AI range from utopian visions of helpful, benevolent artificial minds to dystopian scenarios of conflict between humans and machines. These narratives reflect both hopes and fears about what AI might become. While they don’t predict actual technological developments, they help us explore ethical questions, imagine possible futures, and think through implications of creating minds different from our own.