Asset Management and Optimization

Learning Objectives

- You know of common asset management and optimization techniques, including lazy loading, code splitting, minification, and compression.

- You understand the underlying principles of these techniques and how they can be applied to improve performance and user experience.

At the end of the last chapter, we discussed hybrid rendering. Our example showcased an application that rendered a page with one hundred items on the server and then fetched and rendered the remaining items on the client. Fetching the remaining items started as soon as the HTML had been parsed into the DOM, so the items were retrieved regardless of whether the user ever scrolled down to view them.

This is where asset management and optimization come into play — we can make informed decisions about when and how to load assets to improve performance and user experience.

Lazy Loading

Lazy loading defers the loading of non-critical resources until they are needed, which reduces the initial load time and conserves bandwidth. In terms of content shown on a site, lazy loading can be applied to practically any asset, including images, videos, JavaScript modules, and third-party scripts.

The starting point of lazy loading is often to identify assets that are not immediately required for the initial render of the page. Examples include images below the fold, JavaScript modules that are needed only after user interaction, and third-party scripts that are not essential for the initial page load.

The term fold refers to the part of the webpage that is visible to the user without scrolling. Content that is not visible to the user without scrolling is considered below the fold.
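At its core, lazy loading means wrapping the code that loads a resource in a function that is called only when the resource is first needed, and reusing the result afterwards. The following minimal sketch shows this idea; the helper name lazy is hypothetical, and the loader is assumed to return a promise, such as the one returned by fetch or by a dynamic import().

```javascript
// Hypothetical helper: defers calling `loader` until the resource is first
// requested, and then reuses the same promise for every later request.
// `loader` is assumed to return a promise.
function lazy(loader) {
  let promise = null;
  return () => {
    if (promise === null) {
      promise = loader().catch((error) => {
        // Forget a failed attempt so that the next request can retry.
        promise = null;
        throw error;
      });
    }
    return promise;
  };
}

// Usage: nothing is loaded until getChart() is called for the first time.
let loads = 0;
const getChart = lazy(async () => {
  loads += 1;
  return { draw: () => "chart drawn" };
});
```

In a browser, the loader could be, for example, () => fetch(url).then((response) => response.json()). Caching the promise rather than the result means that calls made while the resource is still loading share a single request.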

As a concrete example, we could modify our earlier hybrid rendering example to lazy load the remaining items only when the user scrolls down to view them. This would prevent unnecessary data fetching and rendering. This can be implemented with the Intersection Observer API, which lets us detect when an element enters (or is about to enter) the viewport and call a function when this happens.
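To build some intuition for what the observer checks, the following sketch models the vertical part of the test for an element observed against the browser viewport. It is a simplification (it ignores horizontal overlap, thresholds, and edge-adjacent elements), and the function name isNearViewport is hypothetical. Its rootMargin parameter mirrors the real rootMargin option of IntersectionObserver, which grows the area that counts as visible so that the callback fires slightly before an element scrolls into view.

```javascript
// Simplified, hypothetical model of an intersection check against the viewport.
// `rect` has `top` and `bottom` in pixels relative to the top of the viewport,
// like the values returned by element.getBoundingClientRect().
// `rootMargin` extends the viewport by that many pixels above and below.
function isNearViewport(rect, viewportHeight, rootMargin = 0) {
  const areaTop = -rootMargin;
  const areaBottom = viewportHeight + rootMargin;
  // The element intersects if it ends below the area's top
  // and starts above the area's bottom.
  return rect.bottom > areaTop && rect.top < areaBottom;
}
```

In the browser, the same effect is achieved with new IntersectionObserver(callback, { rootMargin: "200px" }). The browser performs these checks itself, so no scroll event listeners are needed.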

First, we would add a placeholder item, Loading..., to the end of the list. It marks the end of the list, which lets us detect when the user has scrolled there.

```html
<ul id="list">
  ${items.map((item) => `<li>${item.name}</li>`).join("")}
  <li id="last">Loading...</li>
</ul>
```

Then, we would extract the code for retrieving and rendering the remaining items into a separate function, loadRemaining. The function also removes the Loading... item from the list.

```javascript
const loadRemaining = async () => {
  const list = document.getElementById("list");

  // Fetch the items that were not rendered on the server.
  const response = await fetch("http://localhost:8000/items/remaining");
  const json = await response.json();

  // Remove the "Loading..." placeholder before appending the items.
  document.getElementById("last")?.remove();

  for (const item of json) {
    const li = document.createElement("li");
    li.textContent = item.name;
    list.appendChild(li);
  }
};
```

Finally, the intersection observer would be created once the HTML document has been parsed, that is, on the DOMContentLoaded event. The observer watches the last item and calls loadRemaining when the item enters the viewport. Observers continue to observe elements even after the elements have been removed from the DOM, so in addition to loadRemaining removing the last item, the callback disconnects the observer. This way, loadRemaining is called only once.

```javascript
document.addEventListener("DOMContentLoaded", () => {
  const observer = new IntersectionObserver((entries, obs) => {
    // We observe a single element, the "Loading..." item.
    if (entries[0].isIntersecting) {
      loadRemaining();
      // Stop observing so that the remaining items are loaded only once.
      obs.disconnect();
    }
  });

  observer.observe(document.getElementById("last"));
});
```

As a whole, the updated /hybrid route would look as follows.

```javascript
app.get("/hybrid", async (c) => {
  const items = await getInitialItems();

  return c.html(`<html>
  <head>
    <script>
      const loadRemaining = async () => {
        const list = document.getElementById("list");
        const response = await fetch("http://localhost:8000/items/remaining");
        const json = await response.json();

        document.getElementById("last")?.remove();

        for (const item of json) {
          const li = document.createElement("li");
          li.textContent = item.name;
          list.appendChild(li);
        }
      };

      document.addEventListener("DOMContentLoaded", () => {
        const observer = new IntersectionObserver((entries, obs) => {
          if (entries[0].isIntersecting) {
            loadRemaining();
            obs.disconnect();
          }
        });

        observer.observe(document.getElementById("last"));
      });
    </script>
  </head>
  <body>
    <ul id="list">
      ${items.map((item) => `<li>${item.name}</li>`).join("")}
      <li id="last">Loading...</li>
    </ul>
  </body>
</html>`);
});
```

Now, the remaining items are fetched and rendered only when the user scrolls down to view them; that is, they are lazily loaded when they are needed.

Infinite scrolling mechanisms use lazy loading to load additional content as the user scrolls down: new content is loaded when the user reaches the end of the current content.

Unlike in our example, the new content would be loaded in smaller batches, and the observer would not be disconnected after a batch has been loaded. Instead, the marker element at the end of the content would be moved further down, below the newly added items, and the observer would continue to observe it.
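The bookkeeping behind such a mechanism can be kept separate from the DOM. The sketch below assumes a hypothetical fetchBatch(offset, limit) function that resolves to an array of items, for instance by requesting an endpoint that supports offset and limit parameters (our example server does not have one). An observer callback would call loadNext(), append the returned items before the marker element, and disconnect the observer once done is true.

```javascript
// Hypothetical helper for infinite scrolling. `fetchBatch(offset, limit)` is
// assumed to resolve to an array with at most `limit` items, starting at `offset`.
function createBatchLoader(fetchBatch, batchSize = 20) {
  let offset = 0;
  let done = false;
  let loading = false;

  return {
    get done() {
      return done;
    },
    // Resolves to the next batch of items to append. Resolves to an empty
    // array if everything has been loaded or a batch is already being loaded,
    // so that an observer firing twice does not cause duplicate items.
    async loadNext() {
      if (done || loading) return [];
      loading = true;
      try {
        const batch = await fetchBatch(offset, batchSize);
        offset += batch.length;
        // A batch smaller than requested means there is nothing more to load.
        if (batch.length < batchSize) done = true;
        return batch;
      } finally {
        loading = false;
      }
    },
  };
}
```

Guarding against overlapping calls matters because the observer may report the marker as intersecting again before the previous batch has arrived.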

Code Splitting

While our earlier examples have been simple, real-world applications often consist of a large codebase. As an example, the 2024 Web Almanac reports that the median page loads over 500 kilobytes of JavaScript, while the heaviest 10% of pages load more than 1.7 megabytes. Such large amounts of JavaScript take time to download, parse, and execute, which can lead to slow load times and a poor user experience. Often, much of that code is not even needed.

Code splitting is a technique for dividing the codebase into smaller chunks that can be loaded on demand. Common approaches include splitting the code by entry point (i.e., by path), by third-party vendor, and by whether the code is shared across the application or not. More fine-grained approaches split the code even at the level of individual components, modules, or events.
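As a simplified illustration of splitting by whether code is shared, the sketch below takes a hypothetical map from entry points to the modules they use. Modules used by more than one entry point are placed into a shared chunk, and the rest stay in per-entry chunks. Real bundlers such as webpack, Rollup, or esbuild apply more elaborate rules; the function name and module names here are made up for illustration.

```javascript
// Hypothetical, simplified chunk splitting. `entries` maps each entry point
// (e.g., a path) to the names of the modules it uses.
function splitChunks(entries) {
  // Count how many entry points use each module.
  const usage = new Map();
  for (const modules of Object.values(entries)) {
    for (const name of new Set(modules)) {
      usage.set(name, (usage.get(name) ?? 0) + 1);
    }
  }

  // Modules used by more than one entry point go into a shared chunk that
  // the browser can download once and cache.
  const shared = [...usage.keys()].filter((name) => usage.get(name) > 1).sort();

  // Everything else stays in the chunk of the only entry point that uses it.
  const perEntry = {};
  for (const [entry, modules] of Object.entries(entries)) {
    perEntry[entry] = [...new Set(modules)]
      .filter((name) => usage.get(name) === 1)
      .sort();
  }
  return { shared, perEntry };
}

// Usage with hypothetical module names for two paths of an application.
const chunks = splitChunks({
  "/": ["layout.js", "home.js"],
  "/hybrid": ["layout.js", "list.js", "observer.js"],
});
```

Here, layout.js ends up in the shared chunk, so a user who visits both paths downloads it only once.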

Let’s again continue with our hybrid rendering example. We could split the code into two parts: one that is needed for the initial render and one that is needed only for the lazily loaded items. The initial render would include the first one hundred items and a small script that, when needed, loads and runs the code for fetching the remaining items. Loading that code on demand would be done with a dynamic import, import().

Now, we would extract the code for loading the remaining items into a separate file. Create a file called loadRemaining.js and place it in the server/public folder, whose contents are served as static files.

```javascript
const loadRemaining = async () => {
  const list = document.getElementById("list");
  const response = await fetch("http://localhost:8000/items/remaining");
  const json = await response.json();

  document.getElementById("last")?.remove();

  for (const item of json) {
    const li = document.createElement("li");
    li.textContent = item.name;
    list.appendChild(li);
  }
};

// Export the function so that it is available after a dynamic import.
export { loadRemaining };
```

Then, we would modify the /hybrid route to load the loadRemaining.js file with a dynamic import. The dynamic import loads the file only when the user scrolls down to view the remaining items. As import() returns a promise that resolves to the loaded module, we use then to call the module’s loadRemaining function once the file has been loaded.

app.get("/hybrid", async (c) => {

const items = await getInitialItems();

return c.html(`<html>

<head>

<script>

document.addEventListener("DOMContentLoaded", () => {

const observer = new IntersectionObserver((entries, obs) => {

if (entries[0].isIntersecting) {

import("/public/loadRemaining.js").then((module) => {

module.loadRemaining();

});

obs.disconnect();

}

});

observer.observe(document.getElementById('last'));

});

</script>

</head>

<body>

<ul id="list">

${items.map((item) => `<li>${item.name}</li>`).join("")}

<li id="last">Loading...</li>

</ul>

</body>

</html>`);

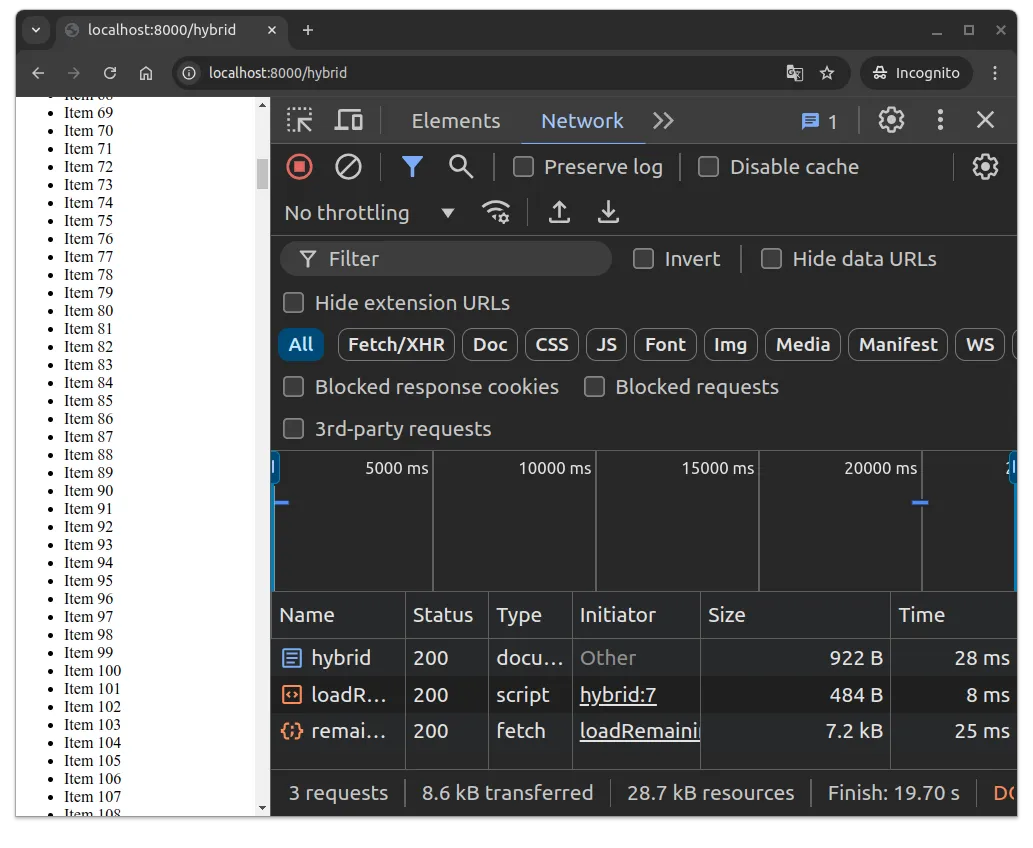

});Now, the code for loading the remaining items is only loaded when the user scrolls down to view them. This reduces the initial payload and improves load times. In our specific case, there’s now however two requests to the server when we scroll to the area that triggers the event observer. The first request is for the JavaScript file, and the second request is for the data.

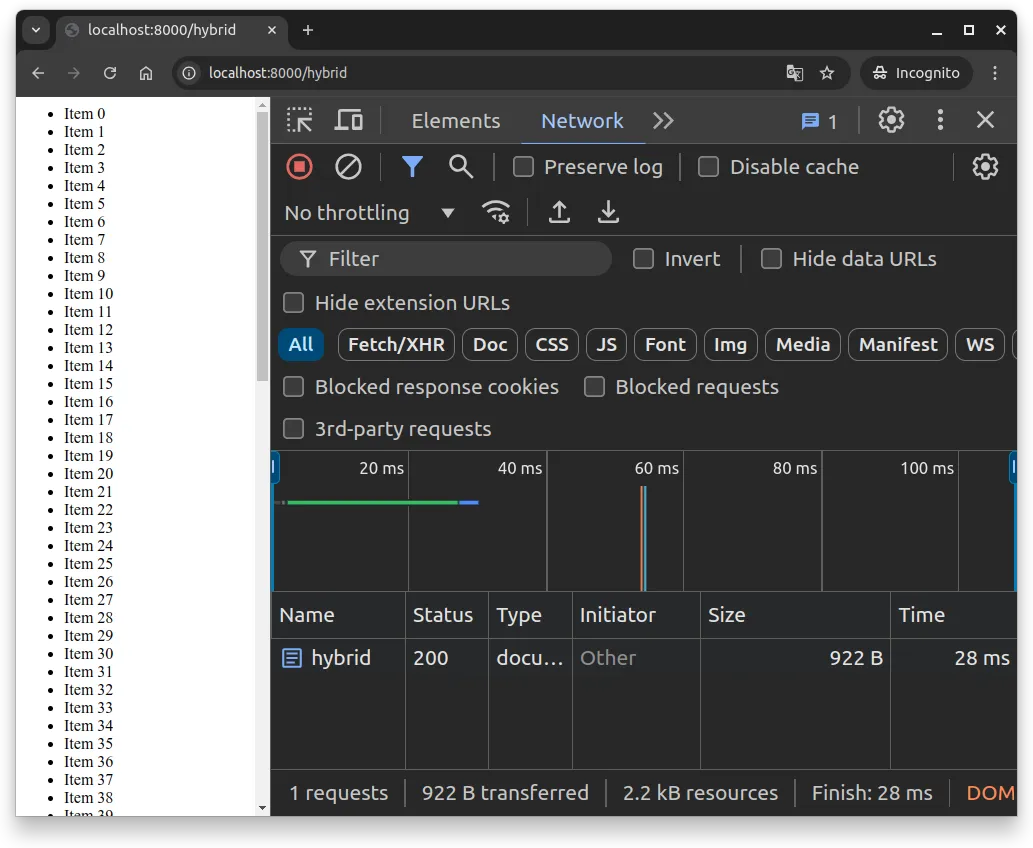

This can also be concretely looked at using the Network tab in the browser’s developer tools. When the page is loaded, the request is made to the /hybrid route, as shown in Figure 1 below.

However, when the user scrolls down to view the remaining items, a new request is made to the server to fetch the JavaScript file, which then loads the remaining items. This is shown in Figure 2 below, where the Network tab now shows the subsequent requests as well.
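One possible way to avoid waiting for the JavaScript file before requesting the data would be to start both requests at the same time with Promise.all. The sketch below assumes a hypothetical variant of loadRemaining.js whose exported function renders items that are passed to it instead of fetching them itself; the parameters loadModule and loadData stand in for the dynamic import and the fetch call.

```javascript
// Starts loading the code and the data at the same time and waits for both.
// In the browser, loadModule could be () => import("/public/loadRemaining.js")
// and loadData could be
// () => fetch("http://localhost:8000/items/remaining").then((r) => r.json()).
async function loadModuleAndData(loadModule, loadData) {
  const [module, data] = await Promise.all([loadModule(), loadData()]);
  return { module, data };
}
```

With this approach, the waiting time is roughly that of the slower request rather than the sum of both, at the cost of restructuring loadRemaining.js.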

Bundling, Minification, and Compression

There are several other techniques that can be used to optimize assets. These include bundling, minification, and compression.

Bundling refers to combining multiple JavaScript modules, or multiple CSS files, into a single file to reduce the number of HTTP requests. If a page needs the contents of multiple files at the same time, it can be more efficient to serve them as one file: fewer requests are needed to load the page, which can improve the load time.
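The sketch below illustrates the basic idea behind bundling JavaScript modules: the source of each module is wrapped in a function, all of them are placed into one script, and a small require function connects them at runtime. It is a hypothetical, heavily simplified bundler for CommonJS-style modules given as strings (no file system access, no import/export syntax, no error handling); real bundlers such as esbuild, Rollup, or webpack do far more.

```javascript
// Hypothetical minimal bundler. `modules` maps module names to CommonJS-style
// source code; `entry` is the name of the module to run first.
// Returns the source code of a single self-contained script.
function bundle(modules, entry) {
  // Wrap each module's code in a function so that it gets its own scope.
  const definitions = Object.entries(modules)
    .map(([name, source]) =>
      `${JSON.stringify(name)}: function (require, module, exports) {\n${source}\n}`)
    .join(",\n");

  // The runtime: a module cache and a require function that runs each
  // module at most once.
  return `(function (definitions) {
  const cache = {};
  function require(name) {
    if (cache[name]) return cache[name].exports;
    const module = { exports: {} };
    cache[name] = module;
    definitions[name](require, module, module.exports);
    return module.exports;
  }
  return require(${JSON.stringify(entry)});
})({
${definitions}
});`;
}

// Usage: two "files" become one script.
const bundled = bundle(
  {
    "format.js": "exports.format = (name) => `<li>${name}</li>`;",
    "main.js": "const { format } = require('format.js');\nmodule.exports = ['a', 'b'].map(format).join('');",
  },
  "main.js",
);
```

The browser would then need a single request for the bundled script instead of one request per module.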

A classic example of bundling from some time ago is the CSS sprite: the images that a site uses are combined into a single image file, and the site then uses CSS to control which part of the combined image is shown and where. This was especially useful when a site used many small images, e.g., images for styled buttons, as the overhead of loading each image separately could be significant.
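With a sprite, each small image is selected by shifting the combined image with the CSS background-position property. The sketch below computes that offset for icons laid out in a grid; the function name spritePosition, the file name icons.png, and the layout (equal-sized icons, filled row by row) are assumptions for illustration.

```javascript
// Computes the CSS background-position that shows icon number `index`
// from a sprite sheet whose icons are all `width` x `height` pixels and
// arranged in rows of `columns` icons.
function spritePosition(index, { width, height, columns }) {
  const x = (index % columns) * width;
  const y = Math.floor(index / columns) * height;
  // Shift the sprite left and up so that the wanted icon lands at 0,0.
  return `${-x}px ${-y}px`;
}

// Usage: the style for the sixth icon (index 5) in a sheet of 16x16 icons, 4 per row.
const style = `width: 16px; height: 16px; background: url("icons.png") ${spritePosition(5, { width: 16, height: 16, columns: 4 })};`;
```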

Minification is the process of removing unnecessary characters from code without impacting its functionality. This includes removing whitespace, comments, and other characters that are not needed for the code to run. Minification reduces the size of the files, which can improve load times.
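To make the idea concrete, the sketch below is a deliberately naive CSS minifier. It assumes that the stylesheet contains no strings or url() values with comment markers, braces, semicolons, or commas inside them. Real projects use dedicated tools (for example, esbuild or Lightning CSS for CSS, and Terser or esbuild for JavaScript) that parse the code instead of relying on regular expressions.

```javascript
// Naive CSS minifier for illustration only (see the assumptions above).
function minifyCss(css) {
  return css
    .replace(/\/\*[\s\S]*?\*\//g, "") // remove /* comments */
    .replace(/\s+/g, " ") // collapse runs of whitespace into one space
    .replace(/\s*([{};,])\s*/g, "$1") // drop spaces around { } ; ,
    .replace(/:\s+/g, ":") // drop spaces after colons ("color: red" -> "color:red")
    .replace(/;}/g, "}") // the last semicolon in a block is optional
    .trim();
}
```

Spaces before colons are deliberately kept, because in a selector such as a :hover the space is meaningful.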

Minification can also include dead code elimination (DCE), which removes code that is never executed. This can be especially useful in larger codebases where there might be code that is no longer used. DCE can also be used as a separate task for improving the codebase — see e.g. Dead Code Removal at Meta: Automatically Deleting Millions of Lines of Code and Petabytes of Deprecated Data.
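Dead code elimination is often based on reachability: starting from the code that is known to run (the entry points), everything that can be reached is kept, and the rest are candidates for removal. The sketch below applies this idea to a hypothetical call graph in which each function lists the functions it calls. Real tools work on the program’s syntax tree and must also take side effects and dynamic code into account, which this sketch ignores.

```javascript
// Hypothetical call graph: each function lists the functions it calls.
const callGraph = {
  main: ["render", "fetchItems"],
  render: ["formatItem"],
  fetchItems: [],
  formatItem: [],
  oldRender: ["formatItem"], // no longer called from anywhere
  legacyHelper: [],
};

// Returns the functions that cannot be reached from the entry points and
// are therefore candidates for removal.
function findUnreachable(graph, entryPoints) {
  const reachable = new Set();
  const stack = [...entryPoints];
  // Depth-first traversal over the "calls" edges.
  while (stack.length > 0) {
    const name = stack.pop();
    if (reachable.has(name)) continue;
    reachable.add(name);
    stack.push(...(graph[name] ?? []));
  }
  return Object.keys(graph).filter((name) => !reachable.has(name));
}
```

Note that formatItem is kept even though the unused oldRender calls it, because it is also reachable through render.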

Compression involves using algorithms to reduce the size of files. This can be done for various types of assets, including JavaScript, CSS, and images. When making a request to a server, browsers typically include an Accept-Encoding header that lists the compression algorithms they support. If the server supports one of them, either by compressing responses on the fly or by having precompressed versions of the files, it can respond with the compressed content and name the algorithm used in the Content-Encoding response header. The browser then decompresses the content automatically when it is received.
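The negotiation step can be sketched as follows. The functions below are hypothetical server-side helpers: they parse the Accept-Encoding header, including optional quality values such as br;q=0.8 (where q=0 means "not acceptable"), and pick the first encoding from the server’s own preference list that the client accepts. As a simplification, the server’s order wins over the client’s quality values.

```javascript
// Hypothetical helper: parses an Accept-Encoding header such as
// "gzip, deflate, br;q=0.8" into a Map from encoding name to quality (q) value.
function parseAcceptEncoding(header) {
  const accepted = new Map();
  for (const part of (header ?? "").split(",")) {
    const [name, ...params] = part.trim().split(";");
    if (!name) continue; // skip empty entries
    let quality = 1; // q defaults to 1 when it is not given
    for (const param of params) {
      const [key, value] = param.trim().split("=");
      if (key === "q") quality = Number(value);
    }
    accepted.set(name.trim().toLowerCase(), quality);
  }
  return accepted;
}

// Returns the first encoding in `serverPreference` that the client accepts
// (quality above 0), or "identity" (no compression) if there is none.
// A missing header is treated as "send uncompressed", the safe choice.
function chooseEncoding(header, serverPreference = ["br", "gzip"]) {
  const accepted = parseAcceptEncoding(header);
  for (const encoding of serverPreference) {
    // "*" stands for any encoding that is not listed explicitly.
    const quality = accepted.has(encoding)
      ? accepted.get(encoding)
      : (accepted.get("*") ?? 0);
    if (quality > 0) return encoding;
  }
  return "identity";
}
```

A server using such a helper would compress the response body accordingly (in Node.js, for example, with zlib.brotliCompressSync or zlib.gzipSync from node:zlib), set the Content-Encoding header to the chosen value, and add Vary: Accept-Encoding so that caches keep the compressed and uncompressed versions apart.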

Text content such as HTML, CSS, and JavaScript can be compressed with algorithms like Brotli or gzip, while images are often compressed by converting them to newer image formats such as WebP. Reverse proxies and load balancers like Traefik can also take responsibility for compressing some content; see e.g. Traefik’s compression middleware.

In addition, it is possible to create multiple versions of media files that are optimized for different screen sizes and resolutions. As an example, there is little point in sending a high-resolution image to a mobile device with a 720p screen, as the screen cannot show the extra detail.
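HTML supports this through the srcset attribute of the img element, where each candidate image is listed with its intrinsic width (e.g., photo-640.webp 640w), together with the sizes attribute, which tells the browser how wide the image will be displayed. The browser then picks a candidate. The sketch below builds a srcset value and models the choice in a simplified way: the smallest image that is at least as wide as the number of device pixels needed. Browsers have more freedom than this, and the file names and function names are hypothetical.

```javascript
// Hypothetical image variants, e.g. produced at build time.
const variants = [
  { url: "/images/photo-640.webp", width: 640 },
  { url: "/images/photo-1280.webp", width: 1280 },
  { url: "/images/photo-2560.webp", width: 2560 },
];

// Builds the value of an img element's srcset attribute.
function toSrcset(images) {
  return images.map((image) => `${image.url} ${image.width}w`).join(", ");
}

// Simplified model of the browser's choice: the smallest variant that covers
// the displayed width in device pixels, or the largest one if none does.
function pickVariant(images, displayWidth, devicePixelRatio = 1) {
  const needed = displayWidth * devicePixelRatio;
  const sorted = [...images].sort((a, b) => a.width - b.width);
  return sorted.find((image) => image.width >= needed) ?? sorted[sorted.length - 1];
}
```

For instance, an image displayed 360 CSS pixels wide on a screen with a device pixel ratio of 2 needs 720 device pixels, so the 1280-pixel variant is the smallest one that suffices.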

Designing applications that work on multiple devices is discussed in more detail in the course Device-Agnostic Design.

Frameworks to the rescue

In this chapter and the previous chapter, we discussed rendering approaches and ways to optimize client-side performance. We intentionally used vanilla JavaScript and simple examples to illustrate the concepts. In practice, many modern web frameworks and libraries provide built-in support for the discussed techniques.

For a brief review, see e.g. Overview of Web Application Performance Optimization Techniques.

In the next chapter, we’ll introduce Astro, a modern framework that combines static site generation with dynamic rendering capabilities, and that comes with many of the optimizations discussed in this chapter built-in. We’ll walk through building a web app with Astro and Svelte, and discuss how Astro handles asset management and optimization.